Project NOVA: Building My Personal AI Orchestration System

Introduction: The USB-C of metaphors

When I first came across Model Context Protocol (MCP) servers at the end of 2024, I found them hard to wrap my head around. First of all, the name was confusing. Everyone seemed to be running them inside Claude Desktop, so how were they “servers”? If anything, wouldn’t the applications they control be the servers, and the MCP servers be the clients?

Even worse was hearing all the influencers, and even Anthropic themselves, explain them as “the USB-C port for AI applications”. What??? You mean one of the vaguest standards of all time? The one where, if the cable isn’t labeled, you don’t know whether you’re going to get Thunderbolt 4 speeds or USB 3 speeds (or USB 3.2 Gen 2, or USB 3.2 Gen 2×2), or any speeds at all? Ok… so in this USB scenario, is the MCP server the host or the client device? Does it do USB-OTG?? Needless to say, this metaphor was not helpful for me.

The “Aha!” Moment

It wasn’t until I watched a video of someone controlling Ableton Live from Claude’s chat interface that it clicked. At that moment I knew – I never wanted to interact directly with software ever again. But we’ll get to that!

For now, I’d just like to share a couple of metaphors that I find more appropriate for describing MCP servers. They’re essentially APIs for other APIs (in the sense that the apps you want to control need APIs of their own). You could even describe them as a sort of condom for your LLMs when they need to slide into dirty servers. Anything but “the USB-C port for AI”.

Having now deployed over two dozen of these in my homelab, I can unequivocally say that they are indeed servers, not just “npx” commands that you run in Claude Desktop, as it might seem. You can spin them up in Docker the same way you would spin up a Jellyfin or Plex server (although some of them require some tinkering first).

They’re real and they’re spectacular.

Enter n8n

Once I found out you could dockerize them and run them on your LAN, it was off to the races. As a self-proclaimed nerd and audio engineer by training, I needed to be able to control Ableton Live from a chatbox too! And Bitwig. And literally every app I interact with on a daily basis. But how would I use them?

I run NixOS on both my desktop and laptop, so there’s no Claude app for me. (Side note: I do have it running in a container via Wine, but it’s a bit too janky for me.) Also, I generally try to install as little software as possible. I like to keep my devices clean, install software on servers, and access apps from a browser. So even if Claude made an app for Linux, I would still prefer a web-based solution.

Up until a few weeks ago, I had never used n8n. I had always thought of it as something I’d try one day as a sort of self-hosted Zapier alternative. When they put out their self-hosted-ai-starter-kit, it further piqued my interest. The thought of a tight integration between Ollama and n8n seemed powerful. I was sold.

I retired my original Ollama instance and spun up n8n’s toolkit. This turned out to be a great call, because soon after, nerding-io dropped their MCP client node, and now I, too, could finally have a robot control my recording sessions! And Home Assistant. And 23 other apps (and counting!).

Enter NOVA

Around my 20th MCP server deployment, as I was swimming in Dockerfiles, system prompts, and n8n JSON files, it dawned on me: “maybe some other nerds might enjoy these files…”

So I gave it a name, and here we are: Project N.O.V.A., the Networked Orchestration of Virtual Agents. What started as an actual, no-joke desire to have an LLM do my audio engineering has morphed into a desire to build what one might call “digital God”. Too much? Fine: micro-AGI. It really feels that powerful.

What is Project NOVA?

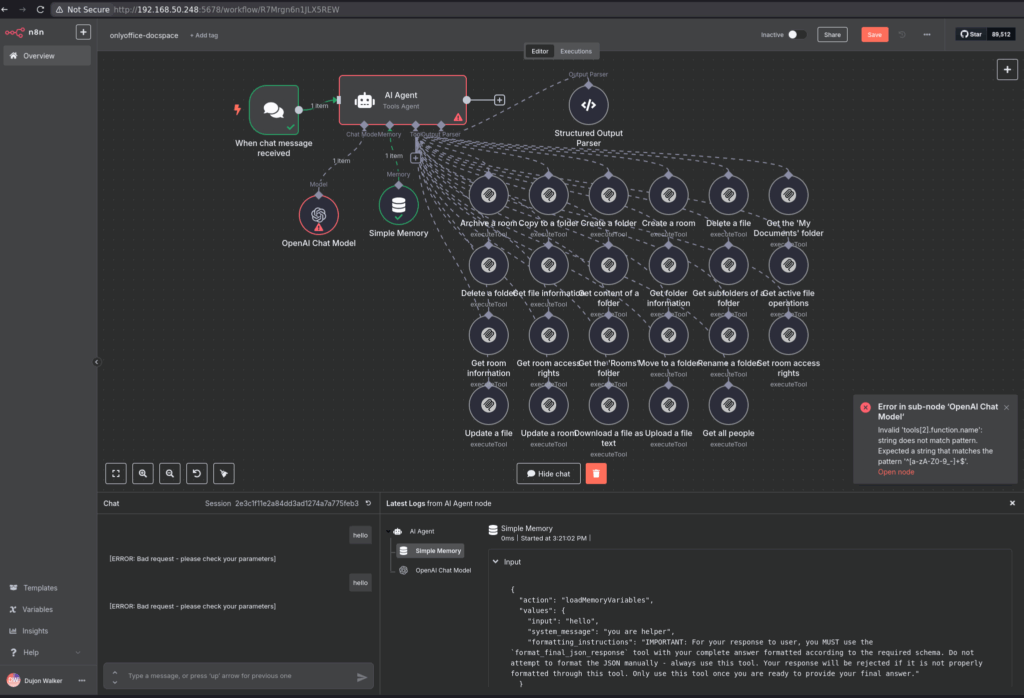

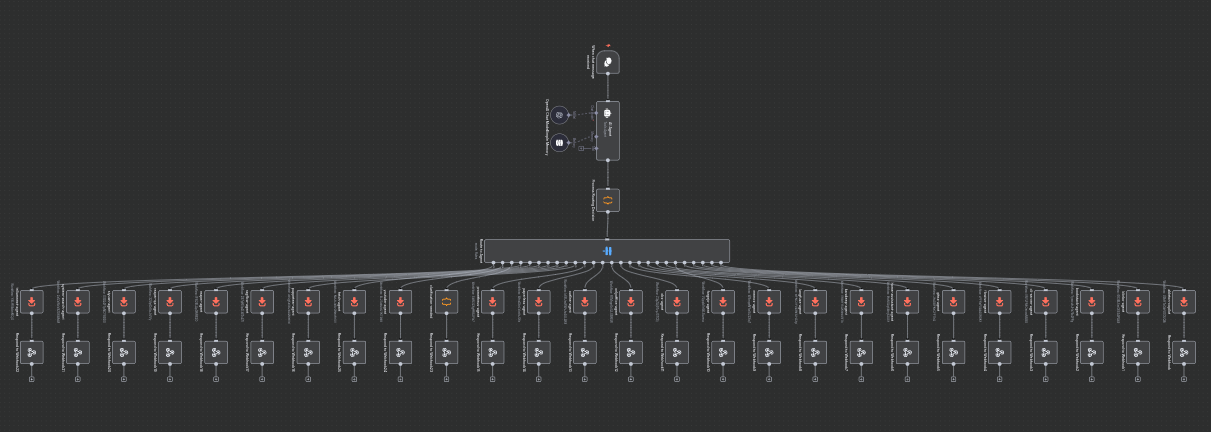

Project NOVA is an AI assistant ecosystem built on n8n workflows that leverages MCP servers to connect an LLM to dozens of specialized tools. The architecture uses a central “router agent” that analyzes requests and delegates them to the appropriate specialized agent, each controlling a specific application or service.

With NOVA, I can simply chat with an AI and have it control nearly anything in my digital world – from managing my notes and documentation to controlling my home automation, from helping with music production to searching through my repositories.

The Router: Mission Control

At the heart of NOVA is a router agent that acts as the central intelligence. When I ask something like “find my notes about that song I was working on last week,” the router:

- Analyzes my request to determine intent and domain

- Selects the most appropriate specialized agent (in this case, perhaps TriliumNext or Memos)

- Forwards my request to that agent along with relevant context

- Returns the specialized agent’s response

This architecture means I never need to specify which tool to use – I just ask, and the system figures out the rest.
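The four routing steps above can be sketched in a few lines of Python. This is a minimal illustration, not NOVA’s actual n8n workflow. The real router asks an LLM to pick the agent, but this sketch swaps in a naive keyword-overlap classifier so it runs on its own. The agent names, keyword sets, and handler functions are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    name: str
    keywords: set[str]                   # hypothetical hints standing in for the router LLM's judgment
    handle: Callable[[str, dict], str]   # forwards a request plus context to the specialized agent


def select_agent(request: str, agents: list[Agent]) -> Agent:
    """Stand-in for the router LLM: pick the agent whose keywords overlap the request most.

    Ties (including zero overlap) go to the first agent in the list.
    """
    words = set(request.lower().replace(",", " ").split())
    return max(agents, key=lambda agent: len(agent.keywords & words))


def route(request: str, agents: list[Agent], context: dict) -> str:
    agent = select_agent(request, agents)   # analyze the request and pick an agent
    reply = agent.handle(request, context)  # forward the request with relevant context
    return f"[{agent.name}] {reply}"        # return the agent's response


if __name__ == "__main__":
    agents = [
        Agent("memos", {"notes", "note", "memo"}, lambda req, ctx: "searching memos..."),
        Agent("home-assistant", {"light", "lights", "temperature"}, lambda req, ctx: "done"),
    ]
    print(route("find my notes about that song", agents, {"user": "me"}))
```

In a real router, `select_agent` is the part an LLM call would replace. The pick, forward, and return shape stays the same.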

My Homelab Setup

One of the more interesting aspects of this project is how it’s distributed across my homelab. It comprises six VMs spread across three physical machines:

| Server Host | Purpose | MCP Servers |
|---|---|---|
| Main VM | Primary MCP host | Most MCP servers, including TriliumNext, BookStack, Memos, Reaper, OBS, Gitea, Forgejo |
| File/GPU Server | File access & GPU-accelerated services | Paperless, Karakeep, System Search (has an RTX 2070 available to containers; also hosts Ollama and n8n) |
| Isolated VM | Security-isolated services | CLI Server |
| Home Automation VM | Home automation | Home Assistant |
| OnlyOffice VM | Document collaboration | OnlyOffice DocSpace |
| Langfuse VM | Prompt management | Langfuse |

The distribution wasn’t random – specific requirements determined each server’s location. The main VM hosts the bulk of MCP servers for simplicity, but certain servers needed special accommodations:

- File-intensive MCP servers landed on the GPU server, which already had all my SMB shares mounted and ready to go

- GPU-dependent services also went to the same server for access to its RTX 2070

- The CLI Server agent needed its own isolated VM, since it has access to the Docker stack and can spin up its own containers – solitary confinement for this superhero

- Some MCP servers like OnlyOffice and Langfuse are co-located directly with their respective applications on dedicated VMs

Additionally, some MCP servers (usually those that control creative applications) need to be on the same device as the application itself for proper communication. Having multiple machines provided flexibility to accommodate these varied requirements.
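Because the agents are spread across several hosts, whatever calls them has to know where each MCP server lives. One way to keep that information in a single place is a small registry. The hostnames, ports, and agent keys below are hypothetical placeholders, loosely following the table above. The `/sse` path is also an assumption, so adjust it to match your gateway’s configuration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class McpEndpoint:
    host: str
    port: int
    path: str = "/sse"  # assumed SSE path; match whatever your gateway actually serves

    @property
    def url(self) -> str:
        return f"http://{self.host}:{self.port}{self.path}"


# Hypothetical hostnames and ports, loosely following the host table above.
REGISTRY: dict[str, McpEndpoint] = {
    "trilium": McpEndpoint("main-vm.lan", 8001),
    "memos": McpEndpoint("main-vm.lan", 8002),
    "paperless": McpEndpoint("gpu-server.lan", 8101),
    "cli": McpEndpoint("isolated-vm.lan", 8201),
    "home-assistant": McpEndpoint("ha-vm.lan", 8301),
}


def endpoint_for(agent: str) -> str:
    """Return the SSE URL for an agent's MCP server, or fail loudly if it isn't registered."""
    try:
        return REGISTRY[agent].url
    except KeyError:
        raise ValueError(f"no MCP server registered for agent {agent!r}") from None


def agents_on(host: str) -> list[str]:
    """List the agents that would go offline if this host went down."""
    return sorted(name for name, ep in REGISTRY.items() if ep.host == host)


if __name__ == "__main__":
    print(endpoint_for("memos"))
    print(agents_on("main-vm.lan"))
```

Keeping placement in data rather than scattered across workflows means moving a server to another VM (say, for GPU access) is a one-line change.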

Challenges I Faced

Building NOVA wasn’t without its difficulties. Here are some issues I came across when putting it all together:

1. Getting the LLMs to Use the Tools

The non-deterministic nature of LLMs really shone through on this project. Even though the models I tested (mostly GPT-4.1 mini and Llama 3.1) were trained for tool use, they won’t necessarily reach for the tools they’re given unless they are strongly nudged, and by that I mean very explicit system messages. You really have to spell out which tools are available, down to the exact names you gave them. For example, if you named one of your tools “list-all-tools”, you need to tell the model that “list-all-tools” is the way to list all tools. Luckily, because n8n lets you put instructions (and examples) at the very heart of each agent node, I found these issues easy enough to overcome.
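In practice, “spelling it out” means putting the exact tool names into each agent’s system message. The sketch below generates that text from a name-to-purpose mapping, so the names in the prompt can’t drift from the ones the MCP server exposes (for example, from the result of an MCP `tools/list` call). The wording, the role, and the tool `search-memos` are hypothetical. In NOVA this text lives in each n8n agent node rather than in Python.

```python
from __future__ import annotations

from typing import Optional


def build_system_message(role: str, tools: dict[str, str], example: Optional[str] = None) -> str:
    """Render a system message that names every tool verbatim and insists the model use them."""
    lines = [
        f"You are the {role} agent.",
        "Always use the tools below instead of answering from memory.",
        "Available tools (call them by these exact names):",
    ]
    # One bullet per tool, quoting the exact name the MCP server exposes.
    for name, purpose in tools.items():
        lines.append(f'- "{name}": {purpose}')
    if example:
        lines.append(f"Example: {example}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_system_message(
        "Memos",
        {
            "list-all-tools": "lists every tool this server exposes",
            "search-memos": "full-text search across memos",
        },
        example='To find notes about EQ, call "search-memos" with the query "EQ".',
    ))
```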

2. Docker Containerization

Containerizing all the MCP servers was time-consuming but necessary for maintainability. Each agent required its own Dockerfile and docker-compose configuration so it could communicate properly with its application and with the central router.

3. Transport Protocol Conversion

One of the biggest hurdles was that most MCP servers use STDIO (standard input/output) for communication, while n8n works better with Server-Sent Events (SSE). I used Supergateway to convert between these protocols, which added a layer of complexity but ultimately made everything work smoothly.
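Conceptually, a STDIO-to-SSE bridge like Supergateway shuttles JSON-RPC messages between two framings. On the STDIO side, the MCP server reads and writes one JSON message per line. On the SSE side, each outgoing message is streamed as an event, and incoming messages arrive as HTTP POST bodies. The sketch below shows only that reframing and assumes the MCP HTTP+SSE convention of sending server messages as `message` events. It is not Supergateway’s code, and it leaves out the HTTP server, the initial endpoint event, and session handling.

```python
import json


def stdout_line_to_sse(line: str) -> str:
    """Reframe one newline-delimited JSON-RPC message from the server's stdout as an SSE event."""
    message = json.loads(line)  # each stdout line should hold exactly one JSON-RPC message
    payload = json.dumps(message, separators=(",", ":"))
    return f"event: message\ndata: {payload}\n\n"  # the blank line terminates the SSE event


def post_body_to_stdin(body: bytes) -> bytes:
    """Reframe a JSON-RPC message POSTed by the client as one line for the server's stdin."""
    message = json.loads(body)
    # json.dumps escapes newlines inside strings, so the result is always a single line.
    return (json.dumps(message, separators=(",", ":")) + "\n").encode()


if __name__ == "__main__":
    request = b'{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}'
    print(post_body_to_stdin(request))
    print(stdout_line_to_sse('{"jsonrpc": "2.0", "id": 1, "result": {"tools": []}}'))
```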

4. Resource Management

Running all these services simultaneously can be resource-intensive. I had to carefully allocate CPU and memory across my homelab to ensure everything runs smoothly, especially when multiple agents are active.

What I Would Do Differently

If I were starting this project again today, I would:

1. Use a Unified Management Layer

Something like MetaMCP would be ideal: a unified middleware layer that could manage all the individual MCP servers. Unfortunately, it may not be viable for this use case, since, as described above, several servers have to run on specific hosts. Still, it’s something I’m watching closely, because centralization would significantly simplify deployment and maintenance.

2. Standardize Agent Workflows

I would create a more consistent template for the n8n workflows upfront. As it stands, the agents follow slightly different workflow patterns (some use the community node, others the official node), which makes maintenance more challenging than necessary.

3. Start with SSE Transport from the Beginning

I initially tried to make STDIO work directly with n8n, which cost me valuable time. Starting with SSE as the standard transport protocol would have saved significant development time.

4. Focus on Fewer, More Powerful Agents

Rather than implementing so many overlapping tools (particularly in the note-taking category), I would focus on a smaller set of more versatile tools. That said, the testing phase was valuable for understanding what works best in different contexts.

Life With NOVA: Practical Applications

Some of the applications I’m most excited about include:

Knowledge Surfacing

With multiple note systems for different purposes (some for quick thoughts, others for structured knowledge), I used to waste time remembering where I stored something. Now I just ask: “Where are my notes about that EQ technique?” and NOVA searches across all systems.
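Searching “across all systems” is essentially a fan-out: send the same query to every note backend in parallel, then merge the hits. The sketch below uses Python’s standard `concurrent.futures`. The backends are hypothetical in-memory stand-ins for agents like TriliumNext, Memos, and BookStack. A backend that raises an error is skipped rather than sinking the whole search.

```python
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor
from typing import Callable

SearchFn = Callable[[str], list[str]]


def search_everywhere(query: str, backends: dict[str, SearchFn]) -> dict[str, list[str]]:
    """Run the same query against every backend concurrently and collect non-empty hits per backend."""
    results: dict[str, list[str]] = {}
    with ThreadPoolExecutor(max_workers=len(backends) or 1) as pool:
        futures = {name: pool.submit(fn, query) for name, fn in backends.items()}
        for name, future in futures.items():
            try:
                hits = future.result()
            except Exception:  # an unreachable backend shouldn't sink the whole search
                continue
            if hits:
                results[name] = hits
    return results


def in_memory_backend(notes: list[str]) -> SearchFn:
    """Hypothetical stand-in for a note agent: case-insensitive substring search."""
    return lambda q: [n for n in notes if q.lower() in n.lower()]


if __name__ == "__main__":
    backends = {
        "trilium": in_memory_backend(["EQ technique: cut before boost", "Mix checklist"]),
        "memos": in_memory_backend(["eq technique for vocals?"]),
    }
    print(search_everywhere("EQ technique", backends))
```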

Creative Workflows

As a musician and producer, this was my original motivation. I can now tell NOVA things like:

- “Create a new recording project with drum, bass, and guitar tracks”

- “Create a simple beat in 6/8 with emphasis on toms”

- “Let me hear that last vocal take”

Home Automation

Natural language control without remembering entity names is a game-changer:

- “Turn on the ambient lighting in the living room”

- “What’s the temperature in the studio?”

- “Turn off all lights except the hallway”
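Part of what makes these requests work is that the agent, not me, maps plain language onto Home Assistant entity IDs, which follow a `domain.object_id` pattern (for example, `light.hallway`). The sketch below shows how “turn off all lights except the hallway” could resolve to a single service call. The entity IDs are hypothetical, and the area matching is a naive substring check. The request shape assumes Home Assistant’s REST endpoint `POST /api/services/light/turn_off` with an `entity_id` list in the JSON body.

```python
from __future__ import annotations


def lights_to_turn_off(entity_ids: list[str], keep: list[str]) -> list[str]:
    """Select every light entity except those whose object_id mentions a kept area."""
    selected = []
    for entity_id in entity_ids:
        domain, _, object_id = entity_id.partition(".")
        if domain != "light":
            continue  # ignore sensors, switches, etc.
        if any(area in object_id for area in keep):
            continue  # naive substring match, e.g. keep anything named "hallway"
        selected.append(entity_id)
    return selected


def turn_off_request(entity_ids: list[str]) -> tuple[str, dict]:
    """Build the (path, JSON body) pair for Home Assistant's light.turn_off service."""
    return "/api/services/light/turn_off", {"entity_id": entity_ids}


if __name__ == "__main__":
    entities = [
        "light.living_room_ambient",
        "light.hallway",
        "light.studio_desk",
        "sensor.studio_temperature",
    ]
    print(turn_off_request(lights_to_turn_off(entities, keep=["hallway"])))
```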

Development Assistance

As a solo developer, version control and code organization become much simpler:

- “Create a new repo for my audio AI project”

- “Find all the places I used that docker image in Gitea”

- “What changes did I make to my NixOS config last month?”

- “Show me the commit history for the config files”

I’m still in the early stages of exploring all these possibilities – stay tuned for an upcoming YouTube video where I’ll demonstrate some of the most impressive use cases in action!

Exciting Future Developments

There are several new developments I’m particularly excited about:

1. New MCP Servers on the Horizon

I’m looking forward to deploying several new MCP servers, including:

- A ComfyUI agent for AI image generation

- A Bitwig Studio MCP server

- An ESXi agent for VM management

- A WordPress agent for content management

- A Hetzner agent (though having my credit card on the line is another frontier entirely!)

2. Open WebUI Integration

Integrating with Open WebUI will be a game-changer, enabling voice interactions and file sharing. This will make NOVA more accessible and more useful day to day.

Conclusion

Building Project NOVA has been a rewarding journey that has transformed how I interact with my digital environment. While there have been challenges, the ability to simply ask an AI assistant to perform tasks across dozens of specialized tools has more than made up for them.

If you’re interested in building your own NOVA system or just want to explore the components, check out the GitHub repository where I’ve shared all the tools and instructions needed to create your own networked AI assistant ecosystem.

I’d love to hear about your experiences with MCP and AI agents – feel free to share in the comments below!